An MCP server is a core component of the Model Context Protocol (MCP), an open protocol that lets AI models such as large language models (LLMs) interact with real-world tools, data sources, and reusable prompts in a standardized way. MCP servers are a foundational part of the growing ecosystem of AI systems built for dynamic, context-aware interaction and automation.

Rather than operating in isolation, AI assistants and applications that connect to MCP servers can reach the outside world: retrieving data, triggering actions, or drawing on shared prompt libraries. This makes them far more capable and useful.

What an MCP server does

An MCP server acts as a control layer that exposes three primary functions to AI tools, agents, or models:

- Tools: Integrates external APIs, services, or connectors, allowing AI to perform tasks like sending emails, posting messages to Slack, updating support tickets, or querying a database.

- Resources: Offers structured and unstructured data sources—logs, files, datasets—that AI models can use in real time to inform outputs.

- Prompts: Provides templated prompts that enable a consistent and context-aware experience across common or repeatable tasks.
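To make the three primitives concrete, here is a minimal, stdlib-only Python sketch of how a server might answer MCP-style JSON-RPC 2.0 requests. The method names (`tools/list`, `tools/call`, `resources/read`, `prompts/get`) follow the MCP specification, but the registries, the `create_ticket` tool, the log resource, and the `summarize_logs` prompt are hypothetical. The sketch also skips the `initialize` handshake, transports, capability negotiation, and input validation that a real server built with an official SDK would handle.

```python
from typing import Any, Callable

# Hypothetical in-memory registries; a real server would use an MCP SDK instead.
TOOLS: dict[str, dict[str, Any]] = {}
RESOURCES: dict[str, dict[str, str]] = {}
PROMPTS: dict[str, Callable[..., str]] = {}


def create_ticket(title: str, priority: str = "normal") -> str:
    """Hypothetical tool: pretend to open a ticket and return a summary string."""
    return f"TICKET-1 ({priority}): {title}"


# Tool: a callable plus the description and JSON Schema the model reads.
TOOLS["create_ticket"] = {
    "fn": create_ticket,
    "description": "Open a support ticket",
    "inputSchema": {
        "type": "object",
        "properties": {"title": {"type": "string"}, "priority": {"type": "string"}},
        "required": ["title"],
    },
}
# Resource: data addressed by a URI (hypothetical log file contents).
RESOURCES["file:///logs/app.log"] = {"mimeType": "text/plain", "text": "ERROR disk full\n"}
# Prompt: a reusable template filled in with arguments.
PROMPTS["summarize_logs"] = lambda service: f"Summarize today's errors for {service}."


def handle(request: dict[str, Any]) -> dict[str, Any]:
    """Dispatch one JSON-RPC 2.0 request to the matching MCP-style method."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {"tools": [
            {"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
            for n, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        tool = TOOLS[params["name"]]
        text = tool["fn"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    elif method == "resources/read":
        res = RESOURCES[params["uri"]]
        result = {"contents": [{"uri": params["uri"], **res}]}
    elif method == "prompts/get":
        text = PROMPTS[params["name"]](**params.get("arguments", {}))
        result = {"messages": [{"role": "user", "content": {"type": "text", "text": text}}]}
    else:
        # -32601 is the JSON-RPC 2.0 "Method not found" error code.
        return {"jsonrpc": "2.0", "id": request.get("id"),
                "error": {"code": -32601, "message": f"Unknown method: {method}"}}
    return {"jsonrpc": "2.0", "id": request.get("id"), "result": result}
```

Calling `handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})` returns the tool catalog a client would present to the model; the model then chooses a tool, and the client sends the matching `tools/call` request.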

MCP clients typically access these features through official SDKs, available in languages such as Python and TypeScript, and can be built into IDEs and other developer environments.
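SDKs hide the wire format, but it helps to see what a client actually sends. The sketch below is a simplified illustration rather than SDK code: it builds the opening exchange for the stdio transport, where each JSON-RPC message is written as a single line of JSON. The protocol version string and client name are assumptions; use the revision your server supports.

```python
import itertools
import json
from typing import Any, Optional

# Assumption: pick the protocol revision your server actually supports.
PROTOCOL_VERSION = "2025-06-18"

_ids = itertools.count(1)  # request ids must be unique within a session


def make_request(method: str, params: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request; the server answers with the same id."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_notification(method: str) -> dict[str, Any]:
    """Notifications carry no id, so the server sends no response."""
    return {"jsonrpc": "2.0", "method": method}


def encode(msg: dict[str, Any]) -> bytes:
    """stdio framing: one compact JSON object per line (json.dumps escapes newlines)."""
    return (json.dumps(msg, separators=(",", ":")) + "\n").encode("utf-8")


def decode_response(line: bytes, expected_id: int) -> dict[str, Any]:
    """Parse one response line, check its id, and raise on a JSON-RPC error."""
    msg = json.loads(line)
    if msg.get("id") != expected_id:
        raise ValueError(f"unexpected response id: {msg.get('id')!r}")
    if "error" in msg:
        raise RuntimeError(f"{msg['error']['code']}: {msg['error']['message']}")
    return msg["result"]


# Opening sequence a client writes to the server process's stdin.
# In practice the client waits for the initialize result before sending the rest.
init = make_request("initialize", {
    "protocolVersion": PROTOCOL_VERSION,
    "capabilities": {},
    "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical
})
handshake = [init, make_notification("notifications/initialized"), make_request("tools/list")]
wire = b"".join(encode(m) for m in handshake)
```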

Why MCP servers matter

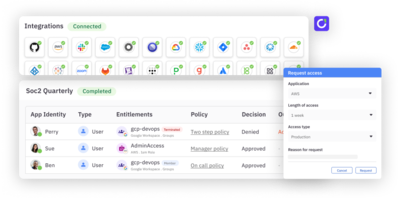

The rise of agentic AI—AI assistants and agents capable of acting autonomously—requires models to move beyond static responses. MCP servers make this possible by mediating real-time, controlled access to apps, APIs, and data.

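This architecture supports safer, more scalable AI applications: because every tool call and data request passes through a defined server interface, it can be authenticated, logged, and checked against policy. Since these systems often touch sensitive business workflows, it is essential to control which agents can reach which tools and to verify high-impact actions, for example with human approval, before they run.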
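One way to implement access control and action verification is to route every tool call through a policy gate. The sketch below assumes a hypothetical per-agent allowlist, a set of tools that require human sign-off, and an in-memory audit log; none of these names come from the MCP specification, and a production system would persist the log and connect `approve` to a real review workflow.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Policy:
    """Hypothetical per-agent policy: which tools it may call, and which need sign-off."""
    allowed_tools: set[str]
    needs_approval: set[str] = field(default_factory=set)


class PolicyError(PermissionError):
    """Raised when a tool call is denied or not approved."""


def guarded_call(
    agent: str,
    tool: str,
    args: dict[str, Any],
    policies: dict[str, Policy],
    tools: dict[str, Callable[..., Any]],
    approve: Callable[[str, str, dict[str, Any]], bool],
    audit: list[tuple[str, str, str]],
) -> Any:
    """Check access, optionally ask a human, record the decision, then run the tool."""
    policy = policies.get(agent)
    if policy is None or tool not in policy.allowed_tools:
        audit.append((agent, tool, "denied"))
        raise PolicyError(f"{agent} may not call {tool}")
    if tool in policy.needs_approval and not approve(agent, tool, args):
        audit.append((agent, tool, "rejected"))
        raise PolicyError(f"{tool} call by {agent} was not approved")
    audit.append((agent, tool, "allowed"))
    return tools[tool](**args)
```

Keeping the check outside the tools themselves means every tool gets the same enforcement and logging, and an unknown agent is denied by default.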

As adoption of open-source AI tools accelerates, the Model Context Protocol is becoming a key architectural pattern—bringing order, control, and real-world utility to increasingly intelligent and autonomous AI systems.

FAQs

Why is securing an MCP server important?

Because MCP servers connect AI models to real-world apps, data sources, and tools, they introduce new security risks—including unauthorized access, data leakage, tool poisoning, and command injection. Proper security and governance are critical to ensure trusted AI behavior, maintain integrity, and prevent misuse across connected AI applications and systems.

What are examples of security risks with MCP servers?

- Tool poisoning: Embedding malicious instructions in tool definitions (names, descriptions, or parameter schemas) to steer an AI model into unsafe or unintended actions.

- Data exfiltration: Unauthorized extraction of sensitive data via exposed connectors or resources.

- Server spoofing: Imitating a legitimate MCP server to trick an AI into executing malicious commands.

- Over-permissioning: Granting AI agents or assistants broad access that exceeds their task scope, increasing blast radius if compromised.
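Some of these risks can be narrowed with simple client-side checks. As one example against tool poisoning, a client can pin a hash of each reviewed tool definition and flag any definition that later changes or appears unannounced. This is a hypothetical sketch, not a complete defense: it cannot tell whether the originally reviewed description was itself malicious.

```python
import hashlib
import json
from typing import Any


def fingerprint(tool: dict[str, Any]) -> str:
    """Hash the fields a model reads, using canonical JSON so key order doesn't matter."""
    relevant = {k: tool.get(k) for k in ("name", "description", "inputSchema")}
    canonical = json.dumps(relevant, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def pin(tools: list[dict[str, Any]]) -> dict[str, str]:
    """Record one fingerprint per tool name after a human has reviewed the definitions."""
    return {t["name"]: fingerprint(t) for t in tools}


def check(tools: list[dict[str, Any]], pinned: dict[str, str]) -> dict[str, list[str]]:
    """Compare a fresh tools/list result against the pinned fingerprints."""
    report: dict[str, list[str]] = {"changed": [], "new": []}
    for t in tools:
        expected = pinned.get(t["name"])
        if expected is None:
            report["new"].append(t["name"])
        elif expected != fingerprint(t):
            report["changed"].append(t["name"])
    return report
```

A client might refuse to expose "changed" or "new" tools to the model until someone re-reviews them.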

How is an MCP server different from a traditional API gateway?

While both connect systems, an MCP server is designed for AI-driven workflows. It exposes tools, resources, and prompts with machine-readable names, descriptions, and input schemas that AI assistants can discover and interpret at runtime, instead of relying on a developer to hard-code each endpoint. Deployments typically pair this with short-lived access, dynamic policy enforcement, and context-aware automation so that AI models can reason and act within defined limits.
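To illustrate short-lived, scoped access, the sketch below mints HMAC-signed tokens that name an agent, list the tools it may call, and expire after a few minutes. It is a simplified, hypothetical scheme using only the Python standard library; for HTTP-based transports, the MCP specification's authorization section builds on OAuth 2.1, which a real deployment should use instead of a hand-rolled token format.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical signing key; load it from a secret store in practice.
SECRET = b"demo-secret-change-me"


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode("ascii").rstrip("=")


def _unb64(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def issue_token(agent: str, scopes: list[str], ttl_s: int = 300,
                now: Optional[float] = None) -> str:
    """Mint a token naming the agent, its allowed tools, and an expiry time."""
    issued = time.time() if now is None else now
    claims = {"sub": agent, "scopes": scopes, "exp": issued + ttl_s}
    payload = json.dumps(claims, sort_keys=True).encode("utf-8")
    sig = hmac.new(SECRET, payload, hashlib.sha256).digest()
    return f"{_b64(payload)}.{_b64(sig)}"


def authorize(token: str, tool: str, now: Optional[float] = None) -> bool:
    """Accept only an untampered, unexpired token whose scopes include this tool."""
    try:
        body_b64, sig_b64 = token.split(".")
        payload, sig = _unb64(body_b64), _unb64(sig_b64)
    except ValueError:  # wrong shape or bad base64 (binascii.Error is a ValueError)
        return False
    expected = hmac.new(SECRET, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(sig, expected):  # constant-time comparison
        return False
    claims = json.loads(payload)
    current = time.time() if now is None else now
    return current < claims["exp"] and tool in claims["scopes"]
```

Passing `now` explicitly keeps the expiry check testable; by default both functions read the system clock.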

Is MCP server technology vendor-specific?

The Model Context Protocol was introduced by Anthropic in November 2024, but it is intended to be an open, standardized way to connect AI systems to external services. The broader ecosystem is evolving, with other vendors building MCP-compatible clients, SDKs, and open-source server implementations for platforms like Slack, GitHub, and others.

What types of environments deploy MCP servers?

MCP servers are typically used in real-time, action-oriented environments where AI models need to interact with external systems. Common use cases include:

- AI-integrated IDEs and developer tools

- Enterprise automation platforms

- AI assistants for customer support or ticketing

- IoT deployments where models act on real-time device data

- AI applications that rely on chaining multiple services or dynamic APIs

Does every AI system need an MCP server?

No. Only AI systems that require real-time, external interaction—such as calling APIs, accessing apps, or orchestrating workflows—benefit from MCP servers. Static or standalone models without external dependencies don’t require MCP integration.