The State of Agentic AI in Identity Security

Agentic AI is gaining ground fast, with security teams driving rapid integration into their ecosystems. What once felt like a theoretical conversation about autonomous systems has turned into an urgent, budget-backed priority for IT and security leaders across industries.

That’s one of the most striking findings from ConductorOne’s 2025 Future of Identity Security Report. The report, based on a survey of nearly 500 security professionals in the US, shows that security leaders are fully aware of the risks agentic AI brings. But rather than pump the brakes, they’re stepping on the gas.

In a world where access decisions are increasingly complex, threats are evolving by the day, and headcount can’t scale to meet demand, AI agents offer something security leaders desperately need: speed. And this year’s data shows they’re ready to embrace it.

AI agents are being adopted fast

Despite the risks, the shift to AI agents is happening rapidly. According to the report, 89% of respondents plan to implement agentic AI in their security departments within the next two years. Just 2% say they will refrain from using AI agents altogether.

And while early adopters might be expected to test the waters with low-impact use cases, that’s not the prevailing approach. Nearly all respondents (96%) said the AI agents they implement won’t be limited to non-critical tasks. That means agentic AI will be responsible for workflows where the consequences of failure are high, including identity provisioning, network security, and even decisions around access to sensitive data.

This level of adoption doesn’t mean security leaders are ignoring the risks. In fact, 83% of respondents said they are concerned about the risks posed by AI agents, and 41% said they are “very concerned.” But that concern isn’t acting as a deterrent. If anything, it’s motivating teams to move quickly, but with care.

The risks are real, and leaders are paying attention

The biggest risks cited by respondents are clear. Topping the list is the threat of AI agents accessing sensitive data without proper authorization. Following close behind are concerns about agents revealing personal data inappropriately, being hijacked by third parties, or retaining long-lived access that’s difficult to track or revoke.

Interestingly, companies that have already experienced multiple identity-based incidents in the past year were more likely to express deeper concern about these risks, suggesting that firsthand experience with identity compromise is driving a more cautious and thoughtful approach to AI agent governance.

Still, the broader consensus is that risk can be managed and that the benefits of speed, automation, and scale outweigh the potential downsides.

Use cases highlight the pain and the potential

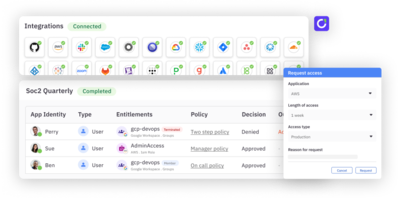

AI agents are being deployed where they can immediately relieve friction and increase operational capacity. The top three anticipated use cases cited by respondents are:

- Network monitoring and analysis (49%)

- Automating tasks in the security operations center (47%)

- Access requests and account provisioning (46%)

Other popular use cases include using agents to summarize complex information and to draft scripts such as SQL queries. These are areas where time savings are significant but risk can still be reasonably contained.

These early deployments are setting the stage for more advanced implementations down the line. Once teams build trust in their AI agents, many are likely to expand the scope of what agents are authorized to do.

Security teams are building thoughtfully, not blindly

One of the most encouraging insights from the report is that security leaders aren’t rushing into AI adoption without strategy. They’re exploring a variety of implementation models to help mitigate the risks associated with autonomous systems.

Some are choosing to keep a human in the loop, ensuring that no critical decision or access grant happens without human oversight. Others are exploring dual-agent systems, where one agent completes a task and a second agent monitors or validates the output. This layered approach reflects the same principles of defense-in-depth that underpin most security programs today.
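To make the two models concrete, here is a minimal Python sketch that combines them: one agent proposes an access decision, a second agent validates it, and a human must approve anything flagged as sensitive. It is an illustration only, not something described in the report. The names `AccessRequest`, `acting_agent`, `validating_agent`, and `decide`, the `sensitive` flag, and the allowlist rule are all hypothetical placeholders for whatever models and policies a team actually uses.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AccessRequest:
    user: str
    resource: str
    sensitive: bool  # hypothetical flag marking high-impact resources


def acting_agent(request: AccessRequest) -> bool:
    """Stand-in for the first agent: proposes whether to grant access."""
    # Placeholder: always proposes a grant. A real agent would call a
    # model or a policy engine here.
    return True


def validating_agent(request: AccessRequest, proposed: bool) -> bool:
    """Stand-in for the second agent: independently checks the proposal."""
    # Placeholder rule (an assumption): only allowlisted users may be granted.
    allowed_users = {"alice", "bob"}
    return (not proposed) or request.user in allowed_users


def decide(
    request: AccessRequest,
    human_approves: Callable[[AccessRequest], bool],
) -> str:
    """Run the layered check and return 'granted' or 'denied'."""
    proposed = acting_agent(request)
    if not proposed:
        return "denied"
    # Dual-agent step: the second agent can veto the first agent's proposal.
    if not validating_agent(request, proposed):
        return "denied"
    # Human-in-the-loop step: sensitive grants need explicit human sign-off.
    if request.sensitive:
        return "granted" if human_approves(request) else "denied"
    return "granted"
```

In this sketch the human reviewer is passed in as a callback, so the same `decide` function could be wired to a ticketing queue or a chat approval prompt. Either layer can deny a request on its own, and a grant goes through only when every applicable layer agrees.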

When it comes to building these agents, organizations are split in their approach. About a third plan to use corporate-provided AI models that are vetted and secured internally. But a notable 19% said they plan to start with open-source models, pointing to the need for clear guidelines around AI supply chain security as adoption accelerates.

Executive pressure is fueling the push for AI

It’s not just security teams driving the adoption of agentic AI. Executive leaders are playing a major role: 43% of respondents said their board or senior leadership team is actively pushing for greater use of AI to improve security. Another 37% said the decision to adopt AI is being left up to the security team. Very few (just 9%) reported that their executive leadership is skeptical of AI use.

This top-down momentum is particularly strong in the financial services and technology sectors. In contrast, sectors like healthcare and manufacturing are seeing less executive involvement, possibly reflecting differing levels of organizational risk tolerance or regulatory oversight.

But overall, the direction is clear: AI is not a side project. It’s becoming a core part of the modern security stack.

Guardrails aren’t slowing down AI—they’re enabling it

The story of agentic AI in 2025 isn’t one of blind adoption or reckless acceleration. It’s about urgency with intention. The risks are real. Security teams know that. But the demands of the job—faster responses, smarter access decisions, more scalable controls—require new tools.

AI agents offer a path forward. Not because they’re perfect, but because they can operate at the scale modern identity security requires. The key is building the right frameworks to keep them in check.