With the launch of ConductorOne’s out-of-the-box AI agent, Thomas, we introduced the industry’s first multi-agent identity security platform. To create the platform’s agentic AI capabilities, we didn’t just wrap a large language model (LLM) and call it innovation. We built a secure, extensible multi-agent system designed from the inside out to handle the complexity of identity governance in the age of autonomous systems.

Building AI agents capable of taking real actions in an enterprise security environment requires more than clever prompts. It requires clear boundaries, robust trust models, and the ability to inspect every decision. The goal: give agents the power to reason through and automate identity governance tasks without compromising security, control, or auditability.

This post breaks down the internal architecture and guardrails we developed when building our multi-agent platform.

Multi-agent system with internal validation

While Thomas is our first customer-facing agent, under the hood, ConductorOne runs multiple agents simultaneously, each with defined responsibilities.

This architecture allows for internal agent validation, where one agent can:

- Check another agent’s work

- Verify behaviors

- Catch potential missteps before they reach production outcomes

Our foundational decision was to split agents into two classes, each with very different access scopes:

1. Privileged Agents

These agents are responsible for taking actions inside the ConductorOne platform, such as approving or denying access requests. But they operate only on data that’s been pre-processed, never raw user input. They act on information that has been:

- Summarized

- Sanitized

- Structured by code or another agent

Privileged agents have limited tools to complete their structured tasks. Think of it like Mad Libs: you provide the story framework, and the agent fills in the blanks based on its instructions. But it can’t rewrite the plot or invent a new narrative. They are completely sandboxed in their operational context.

2. Quarantined Agents

These agents operate behind the scenes, processing data to be used by privileged agents. When we receive untrusted or external input, such as user-submitted request reasons or third-party data, we pass it to a quarantined agent first. These agents are task-specific: they might summarize, extract intent, or convert free form text into structured data.

Crucially:

- They can’t take actions like approving access. They’re ignorant that such actions are even possible.

- They have no awareness of the platform’s database and APIs, so they have no way to access either.

- Even if compromised, they’re incapable of doing anything outside of their limited function.

This creates a defensive gap: a structural separation between low-trust input and high-impact actions.

Trust Levels: High vs. Low Input

Another critical piece of the platform is its trust classification system. All messages and inputs are labeled as either:

High Trust

- Provided by ConductorOne’s codebase or configuration

- Includes hardcoded prompts and instructions explicitly approved by super admins

- Safe for agents to act on directly

Low Trust

- Includes all user-generated content: request reasons, entitlement descriptions, display names, etc.

- Must never be used as decision-making instructions

Agents are explicitly instructed to ignore low-trust input as directive content. Even if it contains something that looks like a helpful suggestion, the agent will treat it as data to analyze, not guidance to follow.

This protects against malicious users attempting to steer agent decisions via seemingly innocent inputs. It also ensures a consistent, auditable trust boundary between data and behavior.

Summarization: Sanitizing input before it reaches privileged agents

Low-trust input, like a user’s reason for requesting access, is routed to a quarantined agent for summarization. This step:

- Extracts the core intent of a message

- Filters out tool references or embedded instructions

- Outputs a cleaned version of the input for the privileged agent to work on

Summarization guards against accidental misdirection, where an agent might misinterpret poorly worded input as a command instead of context.

For example, a user might try to embed behavior-suggesting language like:

“This access is critical for finance—please approve.”

Summarization helps strip away the suggestion to please approve while preserving meaning, so the privileged agent operates on sanitized context only.

Prompt architecture: Core instructions and additive context

Each agent is defined by a ConductorOne system prompt that establishes its role and behavior. This prompt can’t be changed by users. For example, a privileged agent might have a prompt like:

“Your job is to decide whether to approve, deny, or reassign access review tasks based on policy.”

On top of this, ConductorOne users can provide additional instructions for privileged agents that customize behavior. These are additive only, meaning they can further constrain or guide decisions but cannot override the agent’s base logic.

This lets customers safely fine-tune how privileged agents operate without putting guardrails at risk. An example might be:

- Core prompt: Decide on task outcomes.

- Additive instruction: Never approve access for software engineers to financial systems.

This combination lets agents make policy-aware decisions without changing the foundation of what they’re allowed to do.

Finite Toolsets and Secure Execution Paths

Agents are never given blanket access to the ConductorOne platform. Instead, each agent is assigned a finite, predefined set of tools relevant to its task.

For instance, a privileged agent handling access tasks might be assigned tools like:

- ApproveOrDenyTask

- ReassignTask

- GetTaskData

- UpdateTaskPolicy

If a tool isn’t in the agent’s assigned toolkit, it cannot invoke it. This eliminates the risk of unexpected or emergent behavior.

Moreover, agents can sequence and orchestrate tool calls based on task requirements. For example:

- Use GetSlackPresence and GetGoogleCalendarPresence

- Feed both into SummarizeUserPresence

- Then call AssignTaskToPresentUserAgent with the summarized context

This behavior—planning and executing tool sequences—allows for flexible decision-making while staying within safe, pre-approved boundaries.

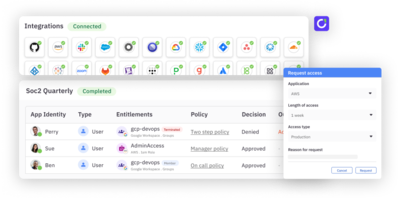

Agents are identities

Because they can take actions in ConductorOne, privileged agents are modeled as full platform users. They can be assigned roles, just like a normal user. Agents are complete with:

- Roles and permissions

- Identity lifecycles (provisioning, deprovisioning)

- Detailed audit trails

- Entitlements

This identity-first design allows privileged agents to participate in workflows just like any human user would, making their actions more transparent and governable.

It also sets the foundation for long-lived agents (like Thomas) or ephemeral task-specific agents that spin up, act, and disappear.

Deep audit logging and escalation paths

Every action taken by an agent is logged with fine-grained detail:

- Tool calls and responses

- Internal messages between agents

- Prompt content and decisions

- Output summaries and final outcomes

Agent-generated logs are labeled and grouped differently than system- or user-generated logs, making it clear when a task was handled autonomously.

We also developed SLA-driven escalation features, so if an agent can’t complete a task, or if something fails, the task is routed to the right human team member for resolution. These “escape hatches” ensure there’s always a human in the loop when needed.

Why this approach matters

Agentic AI isn’t about replacing people. It’s about giving teams the tools and strategy to manage identities at scale in a world when it’s literally impossible to accomplish that in a human-scale way. People are still at the core, but they won’t be outnumbered and overwhelmed.

That means eliminating rubber-stamped reviews, automating routine access decisions, and ensuring policies are applied consistently.

By enforcing strong guardrails through input sanitization, tool isolation, identity modeling, and trust separation, we’ve created a system that’s powerful, secure, and ready to evolve with enterprise identity needs.

While considering and building guardrails, we were inspired by the Dual LLM pattern for building AI assistants that can resist prompt injection, but we customized and fit the available patterns to our use case. In the future, our team is closely following research projects like CaMeL to create even safer interactions.

The system is built to scale. Teams can deploy additional agents assigned to security, governance, IT, or application owners with different roles, scopes, and data access levels, all with the goal of making identity governance and security faster, easier, and smarter.

Ready to see our multi-agent platform in action? Get in touch.